
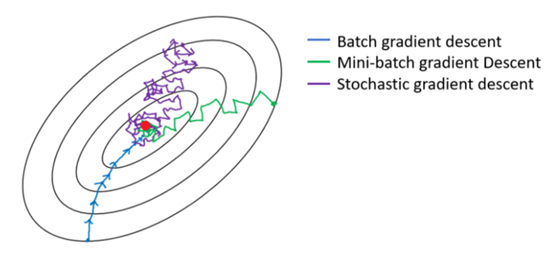

Batch vs Stochastic vs Mini-Batch Gradient Descent

Batch gradient descent (batch size = N) takes relatively low-noise, relatively large steps, so you can just keep marching toward the minimum. However, every step processes the entire training set, so each iteration can take a long time and needs more memory.
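A minimal sketch of batch gradient descent in plain Python makes this concrete. It assumes a hypothetical one-feature linear regression problem (synthetic data generated from y = 3x + 2 plus Gaussian noise) with mean squared error loss. Every update averages the gradient over all N examples, so the loss should fall smoothly from step to step. The helper names, learning rate, and step count are illustrative choices, not part of the original text.

```python
import random

def make_data(n=200, seed=0):
    """Hypothetical synthetic data: y = 3x + 2 plus small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys

def mse(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b over the whole dataset."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def batch_gd(xs, ys, lr=0.1, steps=500):
    """Batch gradient descent: every step uses the gradient over all N examples."""
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(steps):
        # Gradient of (1/N) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
        losses.append(mse(w, b, xs, ys))
    return w, b, losses

xs, ys = make_data()
w, b, losses = batch_gd(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, final loss={losses[-1]:.4f}")
```

Because this loss is a quadratic and the learning rate is small, each full-batch step lowers the loss. The price is that every single step touches all N examples.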

Stochastic gradient descent (batch size = 1) fits easily in memory and is efficient for large datasets. However, it can be extremely noisy: if a particular training example happens to point in a bad direction, the update heads the wrong way. With a fixed learning rate it never truly converges; it keeps oscillating and wandering around the region of the minimum.
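A matching sketch of stochastic gradient descent uses the same kind of hypothetical synthetic regression problem (y = 3x + 2 plus noise, mean squared error). Each update uses a single example, taken in a freshly shuffled order every epoch. The full-dataset loss is recorded after every update so the noise is visible. The learning rate, epoch count, and helper names are assumptions chosen for illustration.

```python
import random

def make_data(n=200, seed=0):
    """Hypothetical synthetic data: y = 3x + 2 plus small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys

def mse(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b over the whole dataset."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def sgd(xs, ys, lr=0.05, epochs=20, seed=1):
    """Stochastic gradient descent: one update per training example (batch size 1)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    losses = []  # full-dataset loss after every single update
    for _ in range(epochs):
        rng.shuffle(order)  # visit examples in a new random order each epoch
        for i in order:
            err = w * xs[i] + b - ys[i]
            # Gradient of the single-example loss (w*x_i + b - y_i)^2.
            w -= lr * 2 * err * xs[i]
            b -= lr * 2 * err
            losses.append(mse(w, b, xs, ys))
    return w, b, losses

xs, ys = make_data()
w, b, losses = sgd(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, final loss={losses[-1]:.4f}")
```

Unlike the full-batch version, the recorded loss goes up on some updates. With the fixed learning rate, the parameters keep jittering around the least-squares solution instead of settling exactly on it.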

In practice, mini-batch gradient descent, with a batch size between 1 and N, works better. It is not guaranteed to head toward the minimum on every step, but it tends to head toward the minimum more consistently than stochastic gradient descent.
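Here is a minimal sketch of mini-batch gradient descent under similar assumptions: hypothetical synthetic data from y = 3x + 2 plus noise, mean squared error, and illustrative hyperparameters. The batch size is a parameter. Setting it to N recovers batch gradient descent, and setting it to 1 recovers stochastic gradient descent.

```python
import random

def make_data(n=200, seed=0):
    """Hypothetical synthetic data: y = 3x + 2 plus small Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys

def minibatch_gd(xs, ys, batch_size=32, lr=0.1, epochs=50, seed=0):
    """Mini-batch gradient descent for y ~ w*x + b under mean squared error.

    batch_size=len(xs) reduces to batch gradient descent;
    batch_size=1 reduces to stochastic gradient descent.
    """
    rng = random.Random(seed)
    n = len(xs)
    w, b = 0.0, 0.0
    order = list(range(n))
    for _ in range(epochs):
        rng.shuffle(order)  # new random partition into mini-batches each epoch
        for start in range(0, n, batch_size):
            batch = order[start:start + batch_size]  # last batch may be smaller
            m = len(batch)
            # Average the gradient over the examples in this mini-batch only.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / m
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / m
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

xs, ys = make_data()
w, b = minibatch_gd(xs, ys, batch_size=32)
print(f"w={w:.3f}, b={b:.3f}")
```

Reshuffling every epoch gives a fresh split into mini-batches. When N is not a multiple of the batch size, the last mini-batch in each epoch is simply smaller.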

