Learn Before

1 x 1 Convolution Layer in Neural Networks

(Network ~ Network) Inception Network (GoogLeNet)

Bottleneck Layer in Inception Network

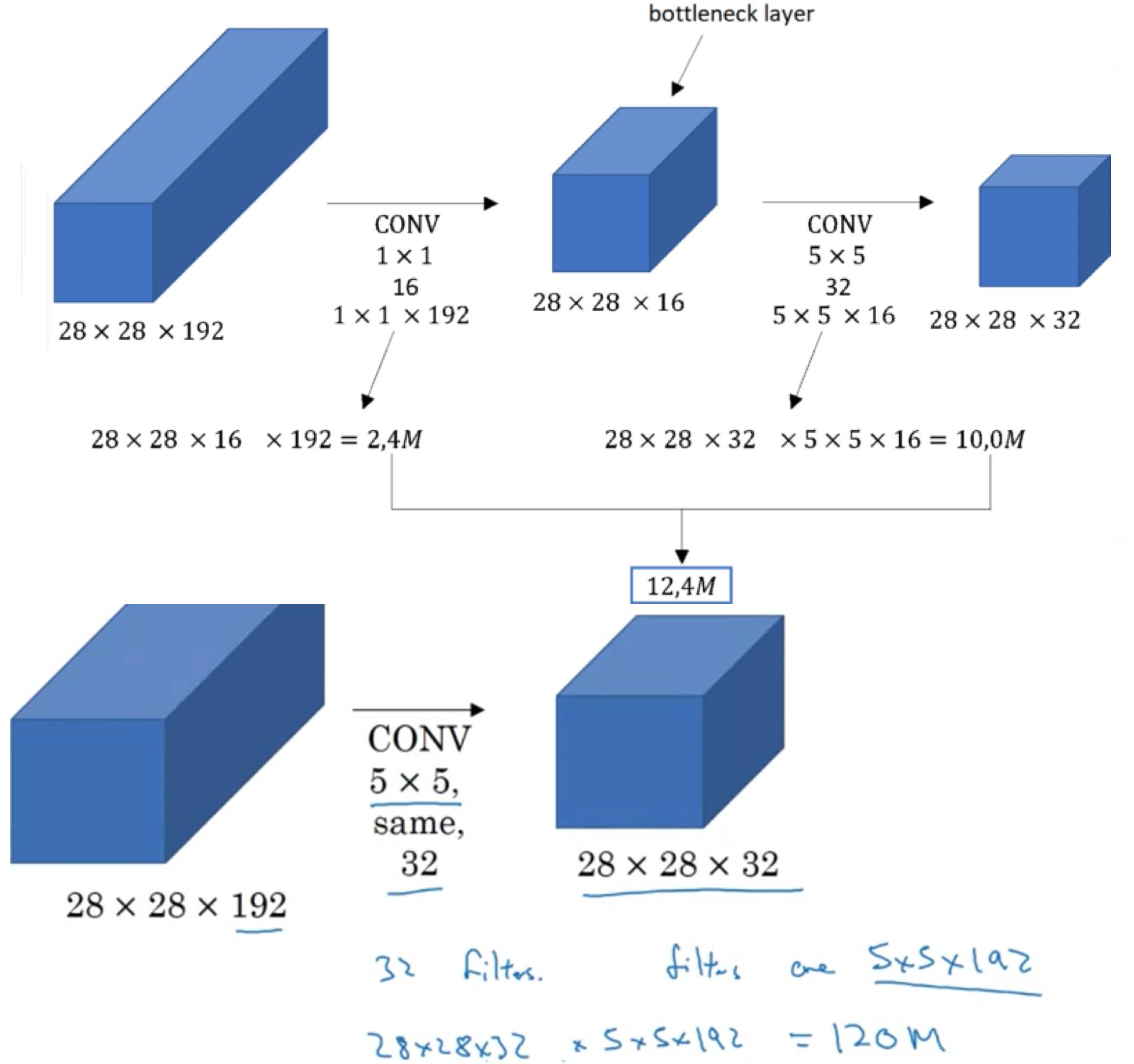

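The bottleneck layer reduces computational cost, and with it training time, by shrinking the number of channels (feature maps) before an expensive convolution. It is a 1 x 1 convolution with fewer filters than the previous layer has output channels. Its output keeps the same height and width but has a smaller depth, so the 3 x 3 or 5 x 5 convolution that follows operates on far fewer input channels.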
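A 1 x 1 convolution is a weighted sum over the input channels at each pixel, applied identically at every spatial position. The sketch below illustrates only that channel reduction: it is a minimal pure-Python version with no bias and no activation (a real Inception module applies a ReLU after the reduction), and the function name `conv1x1` and the 4 x 4 x 8 example volume are hypothetical.

```python
def conv1x1(volume, weights):
    """Apply a 1 x 1 convolution (no bias, no activation).

    volume:  H x W x C_in nested lists
    weights: C_in x C_out nested lists (column f holds filter f)
    Returns an H x W x C_out volume: same height and width, new depth.
    """
    c_in = len(weights)
    c_out = len(weights[0])
    out = []
    for row in volume:
        out_row = []
        for pixel in row:
            if len(pixel) != c_in:
                raise ValueError("pixel depth does not match filter depth")
            # Each output channel is a weighted sum of the input channels at this pixel.
            out_row.append([
                sum(pixel[c] * weights[c][f] for c in range(c_in))
                for f in range(c_out)
            ])
        out.append(out_row)
    return out


# Hypothetical example: squeeze a 4 x 4 volume from 8 channels down to 2.
H, W, C_IN, C_OUT = 4, 4, 8, 2
volume = [[[float(c) for c in range(C_IN)] for _ in range(W)] for _ in range(H)]
weights = [[1.0 if f == 0 else 0.5 for f in range(C_OUT)] for _ in range(C_IN)]
reduced = conv1x1(volume, weights)
print(len(reduced), len(reduced[0]), len(reduced[0][0]))  # 4 4 2
```

Height and width are untouched; only the depth changes, which is exactly the dimension the next, larger convolution has to multiply over.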

As shown in the figure, the bottom architecture requires about 120 M computations (multiplications). Adding the bottleneck layer in the middle, as in the architecture shown on top, reduces this to about 12.4 M, roughly a tenfold saving.
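The two totals can be reproduced by counting multiplications: every value in a convolution's output needs k x k x C_in multiplications. The dimensions below are an assumption, since the text does not state them. They are the ones commonly used for this example (a 28 x 28 x 192 input, 32 filters of size 5 x 5 with "same" padding, and a 16-channel 1 x 1 bottleneck), and they yield the quoted figures. The helper `conv_mults` is hypothetical.

```python
def conv_mults(h_out, w_out, c_in, c_out, k):
    """Multiplications for a k x k convolution producing an h_out x w_out x c_out volume.

    Each of the h_out * w_out * c_out output values needs k * k * c_in multiplications.
    """
    return h_out * w_out * c_out * k * k * c_in


# Assumed dimensions (not given in the text): 28 x 28 x 192 input,
# 32 filters of size 5 x 5 ("same" padding), 16-channel 1 x 1 bottleneck.
direct = conv_mults(28, 28, 192, 32, 5)
bottleneck = conv_mults(28, 28, 192, 16, 1) + conv_mults(28, 28, 16, 32, 5)

print(f"direct 5 x 5 conv: {direct:,}")      # 120,422,400
print(f"with bottleneck:   {bottleneck:,}")  # 12,443,648
```

The bottleneck adds about 2.4 M multiplications for the 1 x 1 layer, but the 5 x 5 layer drops from about 120 M to about 10.0 M because it now sees 16 input channels instead of 192, a factor of 12.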


Tags

Data Science

Related

Bottleneck Layer in Inception Network

1 x 1 Convolution Layer in Neural Networks

(Network ~ Network) Bottleneck Layer in Inception Network

Visual of an Inception Module in Inception Network

Auxiliary Classifiers in Inception Network

Going deeper with convolutions paper

Effect of Inception

Features of GoogLeNet

Learn After

Bottleneck layer

#017 CNN Inception Network