Learn Before

Deep Learning Algorithms

Deep Feedforward Networks (MLP = Multi-Layer Perceptrons)

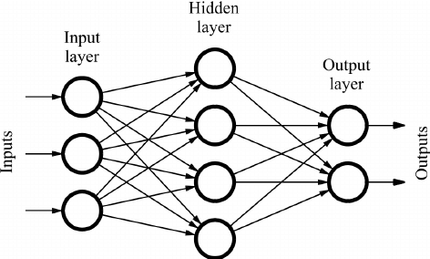

Deep feedforward networks, also called feedforward neural networks, or multilayer perceptrons (MLPs), are the quintessential deep learning models.

The feedforward network was the first and simplest type of artificial neural network devised. In this network, information moves in only one direction: forward, from the input nodes, through the hidden nodes (if any), to the output nodes. There are no cycles or loops in the network.
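The one-directional flow described above can be illustrated with a minimal sketch in plain Python. The layer sizes, the ReLU activation, and the hand-picked weights are assumptions made for illustration, not something the text prescribes; the names `dense`, `relu`, and `forward` are hypothetical helpers.

```python
def relu(values):
    """Element-wise rectified linear unit (an assumed choice of activation)."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the inputs through each layer in order, with no cycles or loops back.

    ReLU is applied after every layer except the last (the output layer).
    """
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


# Hypothetical network: 2 input nodes -> 3 hidden nodes -> 1 output node.
hidden_layer = ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.1, -0.5])
output_layer = ([[1.0, 1.0, 1.0]], [0.0])

result = forward([2.0, 1.0], [hidden_layer, output_layer])
print(result)
```

Each layer only reads the output of the layer before it, which is what distinguishes this structure from recurrent networks, where activations are fed back into earlier computations.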

Tags

Data Science

Related

Neural Network Reference

Convolutional Neural Network (CNN)

Recurrent Neural Network (RNN)

Generative Models

Circuit Theory

More about deep learning algorithms

Deep learning train/dev/test split

Deep Feedforward Networks (MLP = Multi-Layer Perceptrons)

Deep Learning Python libraries (frameworks)

What can convolutional neural networks be used for?

Generative Adversarial Network (GAN)

Deep Learning Frameworks

Adversarial Examples

Optimization for Training Deep Models

Implementations of Deep Learning

Monte Carlo Methods

Learn After

Overall Structure of Deep Feedforward Networks

Stages of Feed Forward Neural Network Learning

Hyperparameters of Feedforward Neural Network

Deep Feedforward Network Cost Functions

Deep Feedforward Network as time passed.

Symbol-to-number Differentiation

Theano and TensorFlow Approach

Symbolic Representations

Feedforward Neural Language Modeling

Feedforward Neural Network Classification in NLP