MTL Methods for Deep Learning

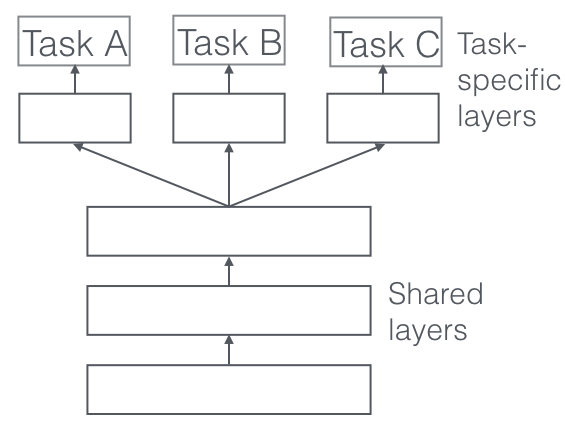

Hard Parameter Sharing

Hard parameter sharing is the most commonly used approach to multi-task learning (MTL) in neural networks. It is generally applied by sharing the hidden layers among all tasks while keeping several task-specific output layers. Hard parameter sharing greatly reduces the risk of overfitting. In fact, the risk of overfitting the shared parameters is smaller by a factor on the order of N, where N is the number of tasks, than the risk of overfitting the task-specific parameters (the output layers). Intuitively, the more tasks we learn simultaneously, the more the model is forced to find a representation that captures all of them, and the lower our chance of overfitting on the original task.
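The structure described above (shared hidden layers, one output layer per task) can be sketched as follows. This is a minimal illustration under stated assumptions, not a training implementation: it assumes NumPy, a single shared ReLU hidden layer, linear task heads, and an unweighted sum of per-task losses. The names `HardSharingMLP` and `joint_mse_loss` are hypothetical.

```python
import numpy as np


class HardSharingMLP:
    """Hypothetical sketch: one shared hidden layer plus one linear head per task."""

    def __init__(self, in_dim, hidden_dim, out_dims, seed=0):
        rng = np.random.default_rng(seed)
        # Shared hidden layer: the same weights are used by every task.
        self.W_shared = rng.normal(0.0, 0.1, size=(in_dim, hidden_dim))
        self.b_shared = np.zeros(hidden_dim)
        # Task-specific output layers: one (W, b) pair per task.
        self.heads = [
            (rng.normal(0.0, 0.1, size=(hidden_dim, d)), np.zeros(d))
            for d in out_dims
        ]

    def forward(self, x):
        # The shared representation is computed once and reused by all heads.
        h = np.maximum(0.0, x @ self.W_shared + self.b_shared)  # ReLU
        return [h @ W + b for W, b in self.heads]

    def param_counts(self):
        # Returns (shared parameter count, list of per-task head parameter counts).
        shared = self.W_shared.size + self.b_shared.size
        task_specific = [W.size + b.size for W, b in self.heads]
        return shared, task_specific


def joint_mse_loss(preds, targets):
    # Assumption: equal task weights. Every term depends on the shared layer,
    # so the shared weights are fit against all tasks at once.
    return sum(float(np.mean((p - t) ** 2)) for p, t in zip(preds, targets))


model = HardSharingMLP(in_dim=8, hidden_dim=16, out_dims=[1, 3])
preds = model.forward(np.ones((4, 8)))
print([p.shape for p in preds])  # [(4, 1), (4, 3)]
print(model.param_counts())      # (144, [17, 51])
```

In this toy configuration most parameters sit in the shared layer, and adding another task adds only a small head, so the shared weights have to serve every task's loss term at once.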
