Learn Before

Common Performance Metrics for Classification

ROC Curve and ROC AUC

ROC stands for "receiver operating characteristic," a name derived from communications theory, the field in which ROC curves were first used.

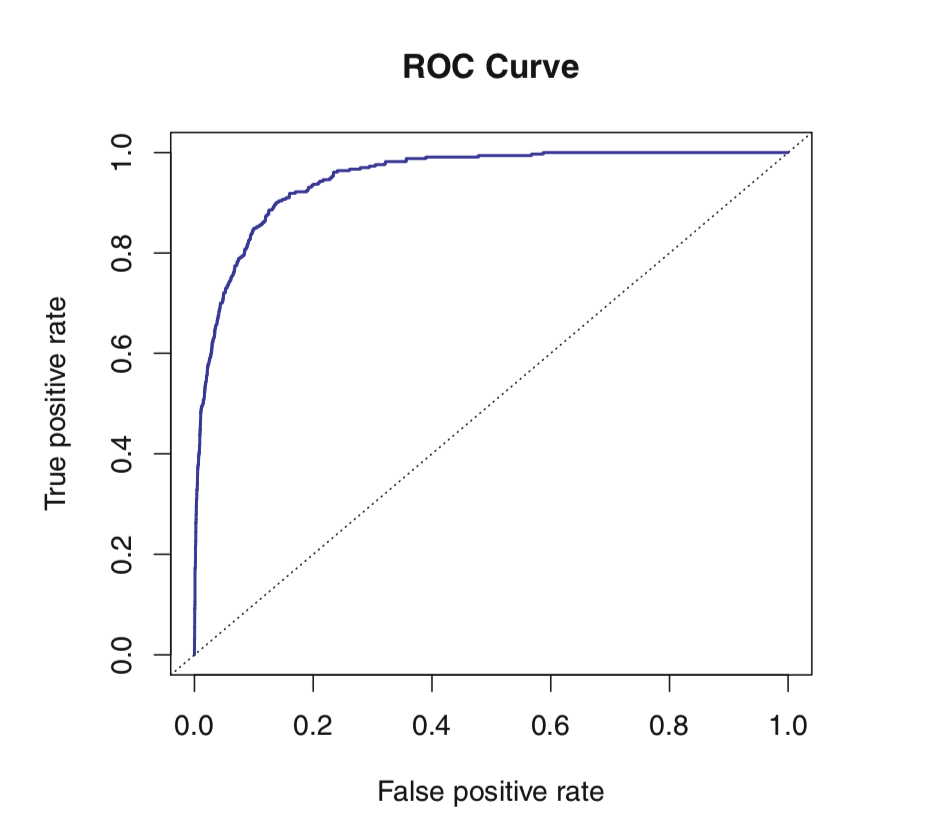

The ROC curve shows how a classifier's false positive rate (x-axis) and true positive rate (y-axis) vary as you change its decision threshold. The sample graph does not show the threshold values, but a threshold corresponding to a point near the upper-left corner of the graph would likely be a good choice for your classifier. With such a threshold, the classifier predicts most positive observations as positive (high true positive rate) and few negative observations as positive (low false positive rate). A curve that rises steeply near the left edge of the graph means the classifier reaches a high true positive rate while the false positive rate is still low.
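As a rough illustration of how the points on such a curve arise, the sketch below sweeps a few thresholds over a small set of scores and computes the two rates at each one. The function name `roc_points`, the toy labels and scores, and the rule "predict positive when score >= threshold" are assumptions made for this example, not something taken from the graph above.

```python
def roc_points(y_true, scores, thresholds):
    """Return a (threshold, fpr, tpr) tuple for each threshold.

    Assumption: an observation is predicted positive when its
    score is greater than or equal to the threshold.
    """
    positives = sum(1 for y in y_true if y == 1)
    negatives = len(y_true) - positives
    points = []
    for t in thresholds:
        # True positives: actual positives scored at or above the threshold.
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        # False positives: actual negatives scored at or above the threshold.
        fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
        points.append((t, fp / negatives, tp / positives))
    return points


# Hypothetical toy data: 1 = positive, 0 = negative.
y_true = [0, 0, 1, 1, 0, 1, 1, 0]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.7, 0.9, 0.6]

for t, fpr, tpr in roc_points(y_true, scores, [0.0, 0.3, 0.5, 0.65, 1.0]):
    print(f"threshold={t:.2f}  FPR={fpr:.2f}  TPR={tpr:.2f}")
```

On this toy data, the threshold 0.65 gives a false positive rate of 0 with a true positive rate of 0.75, which is the kind of upper-left point described above. Lowering the threshold to 0.3 raises the true positive rate to 1 but also raises the false positive rate to 0.5.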

AUC simply stands for "area under the curve".

Generally, the higher the ROC AUC for a classifier, the better it is. The area grows when the curve lies farther above the diagonal, that is, when more threshold values give a true positive rate higher than the false positive rate and when the ratio of true positive rate to false positive rate is larger (both good things). ROC AUC can take on values between 0 and 1. A value close to 1 means the classifier is quite good. A value of 0.5 means the classifier is no better than guessing randomly, and a value below 0.5 means it does worse than random guessing. The blue line on the sample graph has an AUC of 0.95 and the dotted line has an AUC of 0.5.
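To make the "area" concrete, here is a minimal sketch that estimates ROC AUC with the trapezoidal rule, using every distinct score as a threshold. The function name `roc_auc` and the toy data are hypothetical, and the sketch assumes the same "predict positive when score >= threshold" rule; in practice a library routine would normally be used instead.

```python
def roc_auc(y_true, scores):
    """Estimate the area under the ROC curve with the trapezoidal rule.

    Assumption: an observation is predicted positive when its
    score is greater than or equal to the threshold.
    """
    positives = sum(1 for y in y_true if y == 1)
    negatives = len(y_true) - positives
    # Sweep thresholds from high to low so the curve runs from (0, 0) to (1, 1).
    thresholds = sorted(set(scores), reverse=True)
    fpr_prev, tpr_prev = 0.0, 0.0
    area = 0.0
    for t in thresholds:
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
        fpr, tpr = fp / negatives, tp / positives
        # Add the trapezoid between the previous point and this one.
        area += (fpr - fpr_prev) * (tpr + tpr_prev) / 2
        fpr_prev, tpr_prev = fpr, tpr
    return area


# Hypothetical toy data: 1 = positive, 0 = negative.
y_true = [0, 0, 1, 1, 0, 1, 1, 0]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.7, 0.9, 0.6]

print(roc_auc(y_true, scores))                          # 0.875
print(roc_auc([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9]))      # 1.0, every positive outscores every negative
print(roc_auc([0, 0, 1, 1], [0.5, 0.5, 0.5, 0.5]))      # 0.5, the scores carry no information
```

The last two calls mirror the endpoints discussed above: a classifier that ranks every positive above every negative reaches 1, while one whose scores do not separate the classes at all sits at 0.5.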


Tags

Data Science

Related

Confusion Matrix

ROC Curve and ROC AUC

Precision and Recall performance metrics.

F1 Score

Optimizing Criteria in Classification Problems

Satisficing Criteria in Classification Problems

Bayes error rate

What evaluation metric would you want to maximize based on the following scenario?

Recall of a Classification Model

Precision of a Classification Model

Sensitivity Analysis of a Classification Model

Learning Curve of a Classification Model

Having three evaluation metrics makes it harder for you to quickly choose between two different algorithms, and will slow down the speed with which your team can iterate. True/False?

If you had the four following models, which one would you choose based on the following accuracy, runtime, and memory size criteria?

Coverage

How to choose between precision and recall?

F-Measure

Learn After

Given the following models and accuracy scores, match each model to its corresponding ROC curve.

Given the following models and AUC scores, match each model to its corresponding ROC curve.